2025 did not just move fast — it changed the rules.

AI stopped being a clever assistant and started acting more like a strategic partner. Quantum computing edged closer to solving problems that once felt untouchable. And sustainability? It is no longer a side project. It is the lens through which many decisions are made.

The question is: how do leaders quickly turn these shifts into advantage before the next wave hits?

Generative and Agentic AI: From Creation to Autonomy

Generative AI was the headline act last year. Today, it is a warm-up. The real story is agentic AI — systems that do not just respond but act, setting goals and making decisions within defined boundaries.

Microsoft’s move toward Humanist Superintelligence (HSI), led by Mustafa Suleyman, signals a profound shift. As Suleyman puts it:

“Creating superintelligence is one thing. Creating provable, robust containment and alignment alongside it is the urgent challenge facing humanity in the 21st century.”

This is not about racing for Artificial General Intelligence (AGI). It is about building AI that serves rather than leads: augmenting human judgment, not replacing it. Think of it like hiring a brilliant strategist who never forgets who is in charge.

And the market agrees. Microsoft introduced Agent Mode and Office Agent in Microsoft 365 Copilot, enabling “Vibe Working”: workflows where AI orchestrates tasks across teams. Meanwhile, Palantir’s Q3 results show how deeply AI is embedded into enterprise operations. CEO Alexander Karp spoke plainly: AI is not just a feature; it is the foundation of future business models.

Key takeaway for leaders:

AI is becoming an operational layer. Governance and alignment are not optional. They are competitive differentiators.

Quantum Computing and 5G: Speed Meets Scale

Quantum computing moved from theory to practical pilots in 2025. Drug discovery, coordination optimization, and cryptography saw real advancements due to this technology. These breakthroughs are not just academic milestones. They are opening doors to real-world applications that traditional computing could never handle.

Pair that with 5G expansion, and you have the backbone for next-generation IoT, AR/VR, and distributed edge computing. Real-time, high-speed connectivity is not just about faster downloads. It enables autonomous vehicles, immersive training environments, and predictive maintenance at scale. Think of it as upgrading from a two-lane road to a superhighway, and then adding self-driving cars.

Enterprise appetite for this infrastructure is clear. Google Cloud racked up $1 billion in AI-powered deals this quarter, driven by customers demanding platforms that can handle advanced AI workloads and real-time data flows. Sundar Pichai credited “larger deals, more customers, and deepening AI relationships” for this surge. It is a signal that speed, scale, and differentiation are now strategic imperatives.

Why does this matter for leaders? Because quantum and 5G are not isolated technologies. They are enablers of broader transformation. Together, they unlock capabilities like:

- Ultra-fast problem solving: Quantum algorithms can optimize supply chains, financial models, and drug development in ways classical computing never could.

- Edge intelligence: 5G allows AI to work at the edge, reducing latency and enabling real-time decision-making for IoT devices and autonomous systems.

- Immersive experiences: AR/VR training and remote collaboration become seamless when bandwidth and computing power converge.

Implications for business:

- Industries like healthcare and manufacturing will see quantum-driven efficiency first.

- 5G is not just telco infrastructure; it is the enabler for edge AI and real-time analytics.

- Leaders should ask: “Are our systems ready for a world where latency is measured in milliseconds?”

Sustainability: From Compliance to Competitive Advantage

Climate-driven innovation is no longer a checkbox exercise. It is a growth strategy, and in 2025, it became undeniable.

Boards and CEOs are no longer asking “How do we meet regulations?” They are asking, “How do we turn sustainability into a lever for growth?” The shift is clear: green energy, AI-enabled resource optimization, and smart materials are not side projects. They are core to competitive positioning.

Companies are redesigning supply chains to minimize waste and carbon impact. AI is being deployed to predict resource needs, optimize energy consumption, and even model climate risks. Circular models, where products and materials are reused rather than discarded, are moving from niche to mainstream.

Why? Because sustainability now drives investor confidence, customer loyalty, and regulatory resilience. In other words, it’s not just about doing good. It is about staying in business.

Here is what leaders need to consider:

- Sustainability metrics will sit alongside financial KPIs.

- AI will play a critical role in predictive resource management.

- The winners will be those who see sustainability as innovation, not obligation.

The question is not whether sustainability matters. It is whether you are ready to make it a source of resilience and growth.

Public Sector Cloud Strategy: Australia Steps Up

Government cloud adoption accelerated in 2025. Australia is setting some benchmarks for others in the region to follow. The drivers? Security, citizen experience, and operational agility in an era where trust and transparency are non-negotiable.

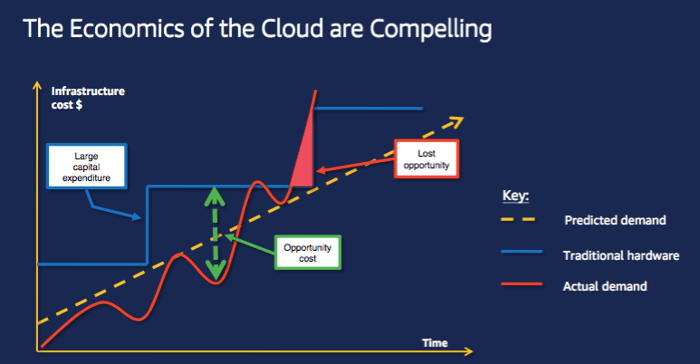

Public sector leaders are moving beyond simple lift-and-shift migrations. Instead, they are embracing cloud-native strategies that enable real-time data sharing, AI-driven service delivery, and resilience against cyber threats. The changing IT landscape is enabling modernization that was not cost-effective in the past.

Why the urgency? Rising citizen expectations for digital services, combined with regulatory pressures and the need for cost efficiency, have made cloud a bedrock of public sector innovation. Agencies are using multi-cloud environments to ensure flexibility and compliance, while beginning to embed AI for tasks such as personalizing services and predicting demand.

For businesses working with government, this shift means higher expectations for:

- Compliance and security: Vendors must meet stringent standards for data sovereignty and privacy.

- Interoperability: Solutions need to integrate seamlessly across platforms and agencies.

- Ethical AI: As AI becomes central to service delivery, transparency and fairness are critical.

The takeaway? Cloud isn’t just infrastructure. It’s the backbone of digital trust. And in the public sector, trust is everything.

Industry Signals: Why These Moves Matter

- Microsoft’s Humanist Superintelligence: Ethical AI is a strategic differentiator.

- Google Cloud’s $1B AI Deals: Proof that enterprises are betting big on AI-driven platforms.

- Palantir’s Hypergrowth: AI is becoming the operating system for modern business.

Looking Ahead: What Will Define 2026?

If 2025 was about acceleration, 2026 may be about integration and governance. Expect three big themes:

- AI Governance: Balancing autonomy with accountability.

- Quantum Commercialization: Moving from pilots towards production.

- Climate-Tech Scaling: Turning sustainability into measurable impact.

Because in business today, adaptability isn’t optional.

Resources