Benefits of Infrastructure-as-Code and Cloud Economics

As I see customers adopt Amazon Web Services, one of the first benefits they realise is the ability to create and bootstrap environments at a time that suits them. This helps to: (1) manage costs; and (2) enable experimentation with new ideas. It appeals from both a financial and an engineering perspective. With this foundational capability in hand, an organisation can build on it to gain further benefits, for example accelerating product development to gain a competitive advantage.

Environments in Traditional Data Centres

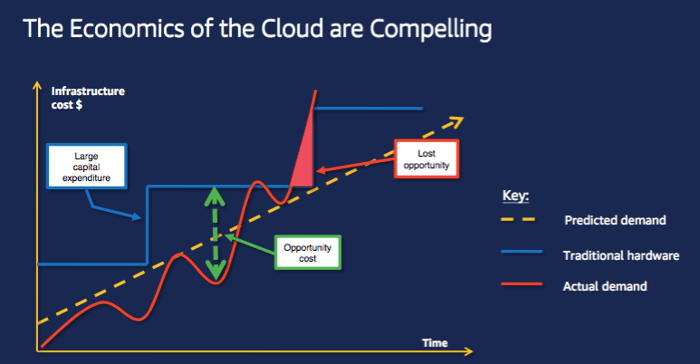

In a traditional data centre we would typically see a dev | test | prod | dr approach to defining non-production (development and test) and production (prod and disaster recovery) environments. The infrastructure for these environments would be purchased at a high upfront cost and then often written down over a typical 3-5 year hardware refresh cycle. Capacity had to be estimated, often by guesswork, in advance of the equipment purchase, and proof-of-concept work would typically occur just before purchase. A proof-of-concept in a hardware refresh cycle might trial and prove new application architectures at that time, perhaps not to be revisited until the next refresh.

Environments in AWS Cloud

Thank goodness we’re no longer confined to traditional data centres! With Amazon Web Services, you can create infrastructure and services without paying any upfront purchase costs. You pay for what you use, when you use it. What’s more (and even better), when you are finished you can destroy the infrastructure and services you provisioned and no further costs are incurred. (Of course, I’m not suggesting you destroy your production environments; the point is the lifecycle capability of provisioning environments in the cloud.)
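To make the pay-for-what-you-use point a little more concrete, here is a rough sketch that compares the monthly share of an upfront hardware purchase with on-demand charges for an environment that only exists during working hours. Every figure in it (purchase price, refresh cycle, hourly rate, hours used) is a hypothetical placeholder rather than real AWS pricing, and it ignores things like storage, data transfer and staff time.

```python
# All figures are hypothetical placeholders for illustration, not real prices.


def monthly_upfront_cost(purchase_price: float, refresh_years: int) -> float:
    """Spread an upfront purchase evenly over the hardware refresh cycle."""
    return purchase_price / (refresh_years * 12)


def monthly_on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay only for the hours the environment actually exists."""
    return hourly_rate * hours_used


if __name__ == "__main__":
    # Hardware bought upfront and written down over a 4-year refresh cycle.
    upfront = monthly_upfront_cost(purchase_price=120_000, refresh_years=4)
    # A test environment created for 8 hours on each of 22 working days,
    # then destroyed at the end of each day.
    on_demand = monthly_on_demand_cost(hourly_rate=0.50, hours_used=8 * 22)
    print(f"Upfront hardware, per month: {upfront:,.2f}")
    print(f"On-demand, per month:        {on_demand:,.2f}")
```

The useful part is the shape of the comparison rather than the numbers: the upfront cost is fixed whether or not the environment is used, while the on-demand cost falls to zero when the environment is destroyed.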

Run a proof-of-concept whenever you want! Trial adoption of database-as-a-service like Amazon Relational Database Service (RDS) to reduce your database administration costs and improve service availability! Introduce high-availability and self-healing compute infrastructure, with Elastic Load Balancing across Availability Zones and Amazon EC2 Auto Scaling!

Why Does It Matter?

Cloud providers such as Amazon Web Services have heralded changes that are nothing short of revolutionary. These changes contribute to the widely acknowledged current technological revolution – the Fourth Industrial Revolution. Globally we have seen the concept of cloud economics introduced to organisations and rapidly adopted. There’s now a more level playing field between smaller organisations and larger ones, which is accelerating innovation, disruptive ideas and products.

Underlying digital agility, innovation and productivity is Infrastructure-as-Code (IaC). IaC is a foundational capability of agile digital organisations. Using IaC, you write code that describes and creates your infrastructure and services. Once the code is written, provisioning is effectively automated: the same code can create the same environment again and again.

Amazon Web Services provides AWS CloudFormation and the AWS Cloud Development Kit (CDK) for IaC.
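As a minimal sketch of what "infrastructure as code" can look like, the Python below builds a small CloudFormation template as a plain dictionary and prints it as JSON. The function name `build_template`, the logical ID `ExampleBucket` and the choice of a single versioned S3 bucket are my own assumptions for illustration; a real environment would describe far more than this.

```python
import json


def build_template(bucket_logical_id: str = "ExampleBucket") -> dict:
    """Return a minimal CloudFormation template describing one S3 bucket.

    The logical ID and the bucket itself are hypothetical examples.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Hypothetical example: a single versioned S3 bucket.",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Keep previous versions of objects in the bucket.
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            },
        },
    }


if __name__ == "__main__":
    # Print the template so it can be saved to a file and deployed.
    print(json.dumps(build_template(), indent=2))
```

Assuming the output is saved as `template.json` (a hypothetical file name), it could be provisioned with `aws cloudformation deploy --template-file template.json --stack-name example-stack` and removed again with `aws cloudformation delete-stack --stack-name example-stack`. Because the template lives in code, it can be reviewed, versioned and re-run like any other source file.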

Why use a human to do dumb, repetitive tasks? Automate them and boost your operational efficiency. Once you have your infrastructure code in hand, build a DevOps pipeline to manage the process of provisioning.

Foundational DevOps relies on IaC.